Professor ponders the implications of autonomous vehicles

Self-driving cars are coming. Soon. That means speeding tickets might be a thing of the past. Meditating while “driving” might be the next new thing.

There’s just one catch: to get there, we might need to let these technological marvels kill us sometimes.

At least that is the argument offered by some researchers working on the thornier implications of autonomous vehicles. Unavoidable accidents will happen, they say. The car needs to know what to do when faced with the choice of running down Grandma Betty or little Bobby.

But Nassim JafariNaimi, a professor at the Georgia Institute of Technology, argues that the idea that self-driving cars must be programmed with “kill scripts” is wrong. What may be worse, she argues, is that it represents a total failure of imagination.

In a paper published in the March edition of the journal Science, Technology, and Human Values, JafariNaimi sounds the alarm over what she sees as too narrow a focus on programming cars to respond automatically in the face of unavoidable accidents, as well as the potential for over-reliance on possibly flawed and biased algorithms.

“Everybody’s taking these ideas as given without challenging them, and that’s the scary part,” said JafariNaimi, a professor in the School of Literature, Media, and Communications who studies the ethical and political dimensions of design and technology. “I want to shake up these assumptions.”

The school is a unit of the Ivan Allen College of Liberal Arts.

The Trolley Problem

Some argue such cars should protect their occupants at all costs. Others argue for the principle of least harm, saying one death is better than five. Some say it is better to spare a child with a full life ahead than a senior citizen.

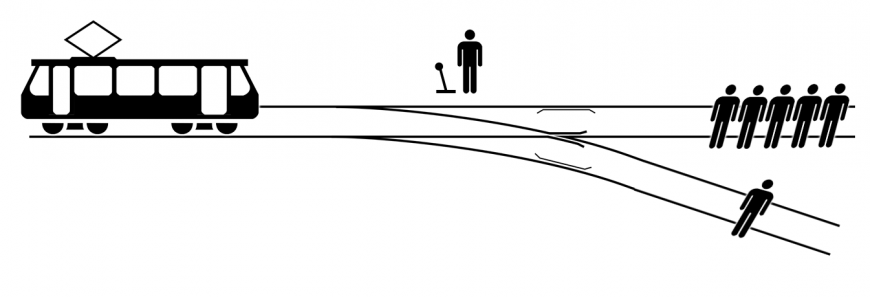

Among ethicists thinking about self-driving cars, this quandary is reminiscent of the “trolley problem,” a classic ethics thought experiment that some argue can serve as the template for what is called algorithmic morality.

One variation of the trolley problem goes like this: An out-of-control trolley is barreling down the tracks toward five people who may not see or hear it, or will not be able to get out of the way in time. You are near a switch that would move the trolley to another track, where only one person would die. What do you do? What if you could stop the trolley by pushing a fat man onto the tracks? What would you do then?

Image credit: McGeddon (own work), CC BY-SA 4.0, via Wikimedia Commons

Ethical Situations Aren’t ‘Frozen in Time’

In her recent paper, Our Bodies in the Trolley’s Path, or Why Self-driving Cars Must *Not* Be Programmed to Kill, JafariNaimi suggests the focus on the trolley problem and its variants leaves out a whole constellation of issues and possibilities.

“Literal readings of the trolley experiments mask the deep sense of uncertainty that is characteristic of ethical situations by placing us outside the problematic situation that they envision, proffering a false sense of clarity about choices and outcomes,” she wrote in her paper.

“Ethical situations cannot be captured by clear choices frozen in time,” she wrote. “They are rather, uncertain, organic, and living developments.”

A Different Vision

Instead of focusing on programming vehicles, JafariNaimi suggests that we take this watershed moment of technological change as an opportunity to rethink our entire urban landscape.

In her paper, she proposes that “a genuine caring concern for the many lives lost in car accidents now and in the future—a concern that transcends false binary trade-offs and that recognizes the systemic biases and power structures that make certain groups more vulnerable than others—could serve as a starting point to rethink mobility as it connects to the design of our cities, the well-being of our communities, and the future of our planet.”

JafariNaimi has not yet spelled out what that redesign might look like, but one thing is clear: it involves system-level thinking about the future of mobility, as opposed to the current trend of investing heavily in artificial intelligence designed to optimize decisions about who lives and who dies in an accident.

Meaningful solutions would take intensive work by a broadly interdisciplinary group, one that combines expertise in engineering and in urban and interaction design with public policy and social science perspectives, JafariNaimi said.

“The livability of our cities is at stake and we need all we know to be brought to bear on this issue,” she said.

The Ghost in the Machine

Beyond the issue of urban infrastructure, though, JafariNaimi has a deep concern about the kind of algorithmic morality being discussed as a solution to the ethical problems surrounding self-driving cars.

Algorithms are often seen as more neutral and less fallible than human intuition. However, they are inherently biased, JafariNaimi said.

Take, for instance, facial recognition algorithms: they don’t always work well because the data sets used to train such systems may be dominated by one skin tone or lack labels describing different characteristics of skin and hair.

Smart city technologies, on which self-driving cars will heavily rely, can have similar pitfalls. In a 2014 report to the White House, a panel convened to examine big data brought up the possibility of unintended consequences from an experimental app in Boston that used smartphone accelerometer and GPS data to notify public works crews about potholes.

The downside? Poor and older people are less likely to have sophisticated smartphones, so such efforts could have funneled city resources to wealthier areas had the city not recognized the issue and taken steps to correct it, the White House task force noted.

Life or Death: Whose Choice?

JafariNaimi worries similar biases might creep into algorithms created to govern how autonomous cars act when lives are on the line. For instance, cars might target the elderly disproportionately because they have fewer years ahead of them than children do.

Even if we deal with issues of bias, the situations presented by rapidly unfolding accident scenarios are too complex for machines to handle, she argues.

She asks us to imagine that we reach agreement that the principle of maximizing life should guide self-driving vehicles. Yet even that clear-eyed principle is too difficult for an algorithm to execute, she said. For instance, how will it pick between a slow-moving elderly person and four teenagers, who may be more swift and capable of dodging the oncoming car?

In the case of the trolley problem, what if the group of five on the tracks are suicidal? What if the fat man knows how to stop the train?

“Figuring out what maximizing life is in each case requires deliberation, and that’s what algorithms cannot do,” JafariNaimi wrote in her paper.

Ultimately, she said, the decision about who lives and who dies is too complex to be based on a moral calculus.

“Who is anyone to say whose life is worth more?” she said.

About Nassim JafariNaimi

Nassim JafariNaimi is an Assistant Professor in the Digital Media program at Georgia Tech and the director of the Design and Social Interaction Studio. She received her Ph.D. in Design from Carnegie Mellon University. She also holds an M.S. in Information Design and Technology from the Georgia Institute of Technology and a B.S. in Electrical Engineering from the University of Tehran, Iran.

JafariNaimi is the recipient of multiple awards for her research and teaching, including the 2017 GATECH CETL/BP Junior Faculty Teaching Excellence Award and the Ivan Allen College of Liberal Arts (IAC) Teacher of the Year Award in 2016.